This is an expanded version of the story that originally appeared in WIRED 17.12 and WIRED UK.

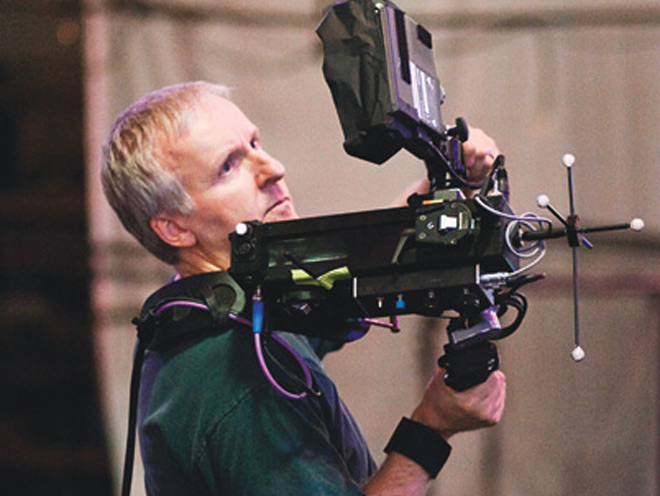

James Cameron (center, in the black T-shirt) on the set in Playa Vista

JAMES CAMERON IS STRIDING ACROSS A VAST SOUNDSTAGE in Playa Vista, an oceanside district of Los Angeles. This enormous, near-windowless building used to be part of Hughes Aircraft, turning out parts for fighter planes during World War II. Next door is the hangar where Howard Hughes built his “Spruce Goose,” the gargantuan flying boat that took so long to construct that the war was over before the craft made its one and only flight. Now it’s March 2008, 10 years almost to the day since the Titanic director took the stage at the Oscars, shook a gold statuette over his head, and notoriously declared himself “king of the world.” Like Hughes, Cameron has been toiling away for years on an epic project some feared would never reach completion: a $250 million spectacle called Avatar.

Even by Hollywood standards, Avatar is moviemaking on a colossal scale. A sci-fi fantasy about a paraplegic ex-Marine who goes on a virtual quest to another planet, it merges performance-capture (a souped-up version of motion-capture) with live action shot in 3-D using cameras invented by Cameron. If all goes according to plan, on December 18 Avatar will dissolve the boundary between audience and screen, reality and illusion — and change the way we watch movies forever. “Every film Jim has made has soared past the envelope into areas nobody even imagined,” says Jim Gianopulos, cochair of Fox, the studio behind both Titanic and Avatar. “It’s not enough for him to tell a story that has never been told. He has to show it in a way that has never been seen.”

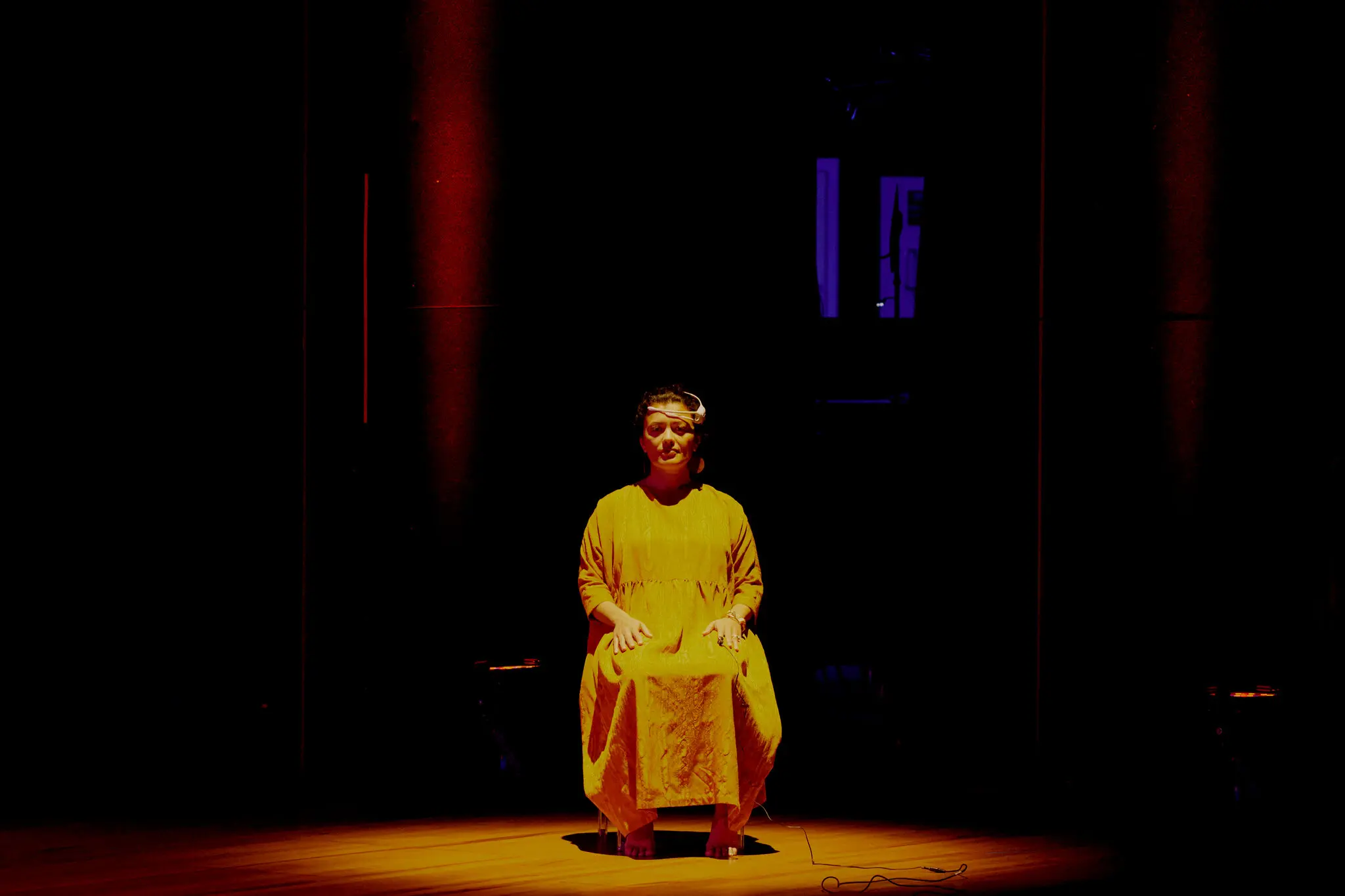

Today, Cameron and his crew are prepping the soundstage to record performance-capture data for a scene with the film’s two stars, Sam Worthington (last seen in Terminator Salvation) and Zoe Saldana (Uhura in J.J. Abrams’s Star Trek). “Come on over here,” he calls out with a wave. “I’ll show you what this is.” Wearing worn jeans and a New Zealand Stunt Guild T-shirt, Cameron holds up a small flat-panel screen tricked out with multiple handles and knobs. This is his virtual camera, he explains, a custom-designed viewing system that enables him to see not what’s in front of him (a darkened soundstage) but the lush, computer-generated world that will appear in the film.

Several feet away, Worthington, who plays soldier-turned-humanoid-avatar Jake Sully, and Saldana, his alien love interest Neytiri, are standing around in black bodysuits dotted with roughly 80 metallic spots. Infrared cameras are strung across the ceiling to track these reflective markers, capturing the movements of the actors’ bodies. These same cameras register markers on the frame of Cameron’s screen as the director moves it about.

What’s truly remarkable here is what appears on his display. Looking into it, Cameron doesn’t see Worthington and Saldana on a soundstage. Instead, he sees Sully and Neytiri, each 10 feet tall with blue skin, catlike features, and long tails. The background is not a bunch of gray plywood risers but the deep rain forest of the planet Pandora, where most of this movie takes place. Cameron can view in real time what other directors have to wait months to see.

But that’s not all he can do. The director calls up a scene recorded yesterday, and suddenly the image switches to a fan lizard, a Cameron-invented flying creature unique to Pandora. Ugly at rest, it turns strangely beautiful when it lifts up its whirligig wings and flies. As the reptile comes to pixelated life, Cameron begins to gyre across the soundstage, tilting the screen. “I can zoom out a little bit. I can follow it around the landscape as he’s flying. Come underneath, come up on top if I want — see how it flies over the terrain.” It’s as if he were shooting with an actual camera — which is exactly the point. “I can’t operate a camera with a fucking mouse,” he says. “It’s ridiculous. It’s why CG camera movements look computer-generated.”

In the scene that Cameron is about to shoot, Jake and Neytiri are leaping through the jungle chasing fan lizards. As an avatar—a human inhabiting the body of a Na’vi, as Pandora’s indigenous humanoids are called—Jake is here to help Earth’s mechanized corporate armies strip the place of its natural resources. Avatars are supposed to be Earth’s goodwill ambassadors—but the Na’vi aren’t buying it. “They take one look at what we’re about and say, no—they tear the place up, they wreck the forest, they are poison,” Cameron explains. “So our guy Jake arrives in a very sour situation.” Yet Neytiri has just saved him from an enormous, charging thanator—another Cameron species, this one named after the Greek god of death—and a vicious pack of razor-toothed viperwolves. Now Jake and Neytiri are getting to know one another. The script calls for the two of them to stare in wonderment at the reptiles, represented on the set by some dots on the ends of a half-dozen skinny wooden poles that crew members are waving about. Setting aside his virtual camera, Cameron grabs a stick and — for the moment anyway — joins the fun.

EIGHTEEN MONTHS EARLIER I’d stood next to Cameron on another soundstage as he discussed the challenge he was about to take on with Avatar. We were in Montreal, on the set of Journey 3-D, Eric Brevig’s Journey to the Center of the Earth remake, the first feature film to employ the stereoscopic camera system Cameron had invented with Vince Pace. And although he hadn’t yet fully committed to Avatar, he was clearly leaning in that direction.

Cameron geeked out that day in Montreal when we were discussing the intricacies of shooting in 3-D, but he wasn’t ready to talk about Avatar. “I don’t want to say too much about it,” he’d said when I asked him about the project most people expected to be his next blockbuster. “It’s a science-fiction film. I would call it an old-school action adventure in a very exotic environment, an Edgar Rice Burroughs-type manly adventure film with maybe a little bit of Heart of Darkness thrown in. It’s an epic. It’s an entire world that’s got its own ground rules, its own ecology. And even though it’s not a sword-and-sorcery-type fantasy, it’s like The Lord of the Rings in that it’s immersive. It’s the kind of thing that as a geek fan, you can hurl yourself into.

“I think the role of that type of film should be to create a kind of fractal-like complexity,” he continued. “The casual viewer can enjoy it and not have to drill down to the secondary and tertiary levels of detail. But for a real fan, you go in an order of magnitude and, boom! There’s a whole set of new patterns. You can step in in powers of ten as many times as you want and it still holds up. So I’ve created enormous notes. I’ve created a bible for the whole world. All the creatures are thought out in terms of their life cycle. But you don’t need to know all that stuff to enjoy it—it’s just there if you want it.”

Now, on the Playa Vista soundstage, Cameron and crew are conjuring up this world.

WHILE CAMERON AND THE ACTORS cavort around the set in Playa Vista, a bunch of technicians are sitting at the far end of the room, pulling the digital strings. This is Avatar’s “brain bar,” three bleacher-like tiers of computer monitors manned by 20 or so people: video specialists on the first level, motion-capture technicians from Giant Studios and real-time CG operators from Cameron’s Lightstorm Entertainment on the second, and visual-effects supervisors and facial-capture experts from Peter Jackson’s Weta Digital up top.

On the monitors, the brain-bar crew is watching various iterations of the fan lizard scene as it’s being captured down below. One screen maps skeletal data points on a grid. Others toggle from Worthington and Saldana on the risers to their animated characters in the jungle.

The brain bar was built to accommodate Avatar’s digital production pipeline. Several months before, Cameron wrapped the bulk of the film’s hi-def 3-D shots in New Zealand, most of them against a greenscreen. Now, thanks to a nifty bit of software called a simulcam, the production team can mix performance-capture from Playa Vista, live-action footage from New Zealand, and computer-generated backdrops to conjure up a real-time composite for Cameron to see immediately. It compresses into seconds a job that would normally take weeks. On the fly, the brain bar can transmute hundreds of gigabytes of raw data into a rough approximation of the fantastic universe that, for the past 15 years, has existed only in Cameron’s mind. “You can call it what you want,” says Glenn Derry, Avatar’s virtual production supervisor, “but it’s really just a big database.”

For Cameron, the brain bar is the ultimate control mechanism. “It started with Jim going, ‘What if I could do this?’” says Richie Baneham, the production’s animation director. But beyond that, there was no master plan. “It just kind of evolved,” Derry says. “We kept adding features and adding features, and then we got to the point where we couldn’t keep track of them all.” He laughs, a little too wildly. “We’ve been doing this for two years! But we’re so far ahead of the curve. Now we bring in people from outside and they go, ‘Whoa!’”

Avatar’s animation director, Richie Baneham (left), and virtual production supervisor, Glenn Derry, created a real-time pre-visualization system which let Cameron direct performance-capture shots while viewing on-the-fly composites of the final scene.

The foundation of Derry’s virtual production system is MotionBuilder, a 3-D character-animation program that’s been used on everything from Rock Band to Beowulf. Giant Studios was brought in for its real-time “solve” — that is, the ability to interpret performance-capture data in real time. They ended up with a highly evolved form of pre-visualization, a computer-animation technique designed to show what a scene might look like with live-action sequences and CGI together.

When Derry started on Avatar in 2005, Steven Spielberg and Industrial Light & Magic were pushing the limits on pre-viz for War of the Worlds. As Spielberg was shooting an action scene, he knew where the alien war machine would go when ILM rendered it months later. But he couldn’t actually see the tripod crashing into cars and buildings during his shoot — which is what you’d get with Cameron’s virtual camera and simulcam setup.

Meanwhile, Baneham had to figure out how to overcome the “uncanny valley,” that unnerving gulf between human and not-quite-human that made the performance-capture figures in Robert Zemeckis’s Beowulf and The Polar Express feel creepy. Zemeckis, Cameron says, “used the same marker system we use for the body and captured the facial performance with those mo-cap cameras. Really a bonehead idea.” Baneham’s solution required the production crew to make a plaster cast of each performer’s head, then build a custom-fitted harness to hold a tiny videocam that would be aimed back at the actor’s face from just a few inches away. The wide-angle lenses would record every twitch, every blink, every frown.

But it would take more than that to make Avatar look real. Before he joined this production, Baneham led the effort to bring Gollum to life in The Lord of the Rings. At that time, when digital animators wanted to render a smile they would just dial the mouth a little wider, which invariably looked phony. To make Gollum so expressive, Baneham and his team studied physiology: What muscles fire to produce a smile, and in what order? How much light does the skin absorb, and how much does it reflect? How deep is the pupil, the opening at the center of the eye? The facial details that made Gollum so convincing were not provided by performance-capture but by painstaking, frame-by-frame CG work. Avatar’s system streamlines that process: Animators can work from the head-rig videos, which supply a complete visual record they can map to each character’s face.

Even as Derry was reinventing virtual production and Baneham was rethinking facial animation, Cameron was throwing out the rules of shooting 3-D. With camera designer Vincent Pace, he developed a system with twin lenses that could mimic human vision. Wearing polarized glasses, viewers would get the same 3-D effect they do with CG films like last summer’s Monsters vs. Aliens or the forthcoming Toy Story 3. But shooting live-action 3-D is more complicated than rendering computer animation in 3-D.

The conventional method relies on a series of cumbersome mathematical formulas designed to preserve the “screen plane,” the surface on which the movie appears. In 2-D that’s the screen itself, but in 3-D it’s an imaginary point somewhere in front of you. “The viewer doesn’t think there’s a screen plane,” Cameron explains. “There’s only a perceptual window, and that perceptual window exists somewhere around arm’s length from us. That’s why I say everything that’s ever been written about stereography is completely wrong.”

To Cameron, eliminating the screen plane is crucial. “The screen plane has always been this subconscious barrier,” says Jon Landau, his longtime producer. By removing it, Cameron hopes to create an all-encompassing cinematic experience. That’s the ultimate goal, the reason for the virtual camera and the simulcam, the hi-def head rigs, the 3-D camera system: total immersion. “This is not just a movie,” Landau says. “It’s a world. The film industry has not created an original universe since Star Wars. When one comes along so seldom, you want to realize it to the fullest possible extent.”

That’s one reason to do all this. The other reason is more personal. “I’ve made a bunch of movies, won a bunch of awards, made a bunch of money,” Cameron declares. “None of those are interesting to me. They never were. It was never about the awards, never about the money, only about the films. But what’s challenging? I look for the new thing. And with this film, we’ve really loaded it up.” ♦

Five Steps to Avatar

To film the alien planet Pandora, James Cameron and his team reinvented movie-making, from the camera to the shooting to the rendering. Here’s how.

1. Reinvent the 3-D camera.

In 2000, Sony agrees to help Cameron build his “holy grail” camera system. Over the next few years, he develops a lightweight, dual-lens, hi-def digital camera capable of shooting precisely calibrated 3-D images that won’t give viewers a headache. The new equipment is used to film Avatar’s live-action sequences.

2. Frame the shot.

To create a precise template for the computer-generated sequences, actors first perform scenes in the soundstage in Playa Vista. Cameron views the action through a virtual camera — an LCD screen that shows the actors as 10-foot-tall aliens inhabiting Pandora’s lush environment. This system allows Cameron to position performers and direct action while seeing a real-time simulation of the finished product.

3. Capture the action.

The actors wear bodysuits dotted with small reflectors. LEDs shoot near-infrared light into the room while up to 140 digital cameras track the reflections. The data is fed into a system that correlates the reflections with the actors’ movements. As the actors move around, the system builds a 3-D record of the scene. Later, that record is mapped onto the digital characters, making the CG sequences appear realistic.

4. Record the faces.

Each actor wears a head rig that holds a tiny high-definition video camera a few inches away from their face. The camera’s wide-angle lens records every subtle facial twitch, every blink, every curl of the lip. All this data is then mapped onto the actor’s computer-generated face.

5. Choreograph the camera work.

After the performances are captured, Cameron returns to the soundstage, now empty of actors. Techs cue up the performances one by one as Cameron uses his virtual camera to choreograph the camera moves — tracking shots, dolly shots, crane shots, pans. The movements are tracked by the same system that records the actors. Cameron’s work is then incorporated into the rendering system so his every directing decision is reflected in the finished product.
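
For readers curious how camera reflections in step 3 might become a 3-D record, here is a minimal sketch of one common approach: triangulating a single marker from two camera sightlines. It is an illustration only, not the production's actual software. The function name `triangulate`, the two-camera setup, and the coordinates are all assumptions made for the example; the real stage used up to 140 cameras and a far more elaborate solve.

```python
# Hypothetical sketch: estimate where one reflective marker sits in 3-D,
# given two cameras that each see its reflection along a known sightline.
# Real sightlines rarely cross exactly, so we take the midpoint of the
# shortest segment joining them.

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def _scale(a, s):
    return tuple(x * s for x in a)

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def triangulate(cam1, dir1, cam2, dir2):
    """Return the 3-D point closest to both sightlines.

    cam1, cam2: camera positions as (x, y, z) tuples.
    dir1, dir2: direction of each sightline toward the marker.
    """
    w = _sub(cam1, cam2)
    a = _dot(dir1, dir1)
    b = _dot(dir1, dir2)
    c = _dot(dir2, dir2)
    d = _dot(dir1, w)
    e = _dot(dir2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("sightlines are parallel; depth cannot be recovered")
    t1 = (b * e - c * d) / denom  # distance along the first sightline
    t2 = (a * e - b * d) / denom  # distance along the second sightline
    nearest1 = _add(cam1, _scale(dir1, t1))
    nearest2 = _add(cam2, _scale(dir2, t2))
    return _scale(_add(nearest1, nearest2), 0.5)

# Two made-up cameras four units apart, both looking at the same marker.
marker = triangulate((0, 0, 0), (2, 3, 5), (4, 0, 0), (-2, 3, 5))
```

Repeating this for every marker on a bodysuit, frame after frame, would yield the kind of moving skeleton the brain bar displays on its grid. Adding more cameras mainly helps when an arm or another actor blocks some of the views.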

December 1, 2009
