Excerpted from Into the Heart of the Mind: An American Quest for Artificial Intelligence (Harper & Row, 1984), a book that explores the ramifications of A.I. by documenting the efforts of a small team of researchers at the University of California, Berkeley. “Machine learning” was a thing of the future; like other A.I. researchers at the time, they had to program the computer with everything it knew. For a contrasting perspective on this approach, see “The Black Knight of A.I.,” a profile of the philosopher Hubert Dreyfus, adapted from Into the Heart of the Mind and published in Science 85 magazine.

Illustration by Deborah Meyers

EVANS HALL IS A BUILDING with a powerful presence: a temple of mathematics and computer science in the form of a massive concrete bunker bigger than any other academic building on the University of California’s Berkeley campus. It can be seen from miles away, its immense bulk resting solid against the hills. Closer up it recedes into the background, becomes the background, an all-encompassing entity approached across a featureless plaza that leads to a series of blank glass doors. To enter it is to step into the world of the number and its machine: chilly, gray, exciting, the future.

Deep in the interior of Evans is the fourth-floor machine room, a cold, windowless space that’s crowded with computers and humming with the vibrations of electronic data being shuffled and filed. The machines are arranged in rows, row after row of trim metal boxes, cables coiled beside them like snakes. The first row on the right is a VAX 11/780 superminicomputer named Kim—Kim No-VAX, so-called because a faculty member was planning to alter its hardware so that it would no longer function as a VAX.

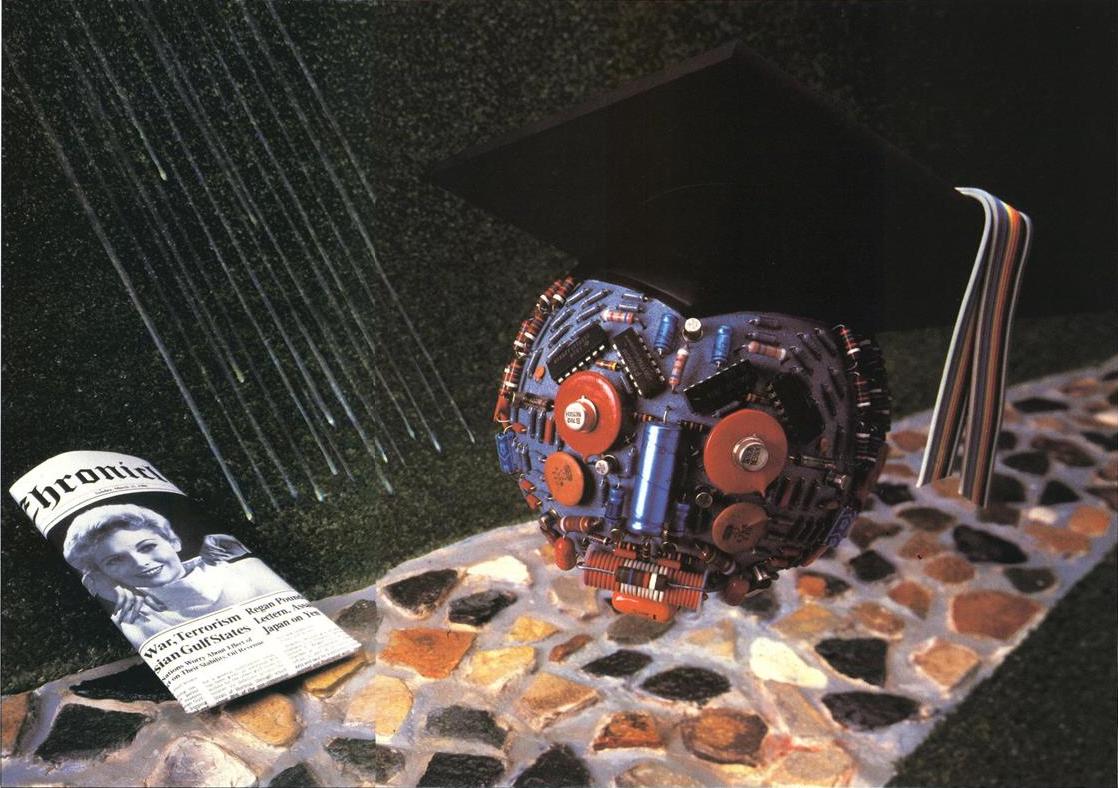

Kim is a two-tone machine, pale-tan body with a light-blue top. On a front panel it wears a tiny newspaper photograph of its namesake, the blonde bombshell of the fifties. In the photograph, Kim the actress appears open-mouthed, sultry, expectant. Kim the machine gives no such clues as to the mysteries within.

ONE OF THE PEOPLE with an interest in Kim is a young computer science professor named Robert Wilensky. Wilensky’s specialty is artificial intelligence. A.I. is an experimental branch of computer science whose goal is to get computers to think—not just to manipulate data, which is what computers have done from the start, but to understand and remember and form judgments and reason. To do, in short, what humans do.

Most of the work involved is actually a highly esoteric form of computer programming. In theory, a computer can be programmed to do anything that can be expressed in a precise and unambiguous set of instructions—an algorithm. It can do anything you can program it to do; without a program, it can do nothing at all. A.I. researchers try to discover the algorithms for intelligent behavior and then write programs that will enable a computer to exhibit it.

It’s a fantastical undertaking, and one that raises a number of questions: Can a computer’s electronic circuitry actually think the way a living brain does? If so, would that make the computer a conscious, sentient being? And what would be the consequences of that? Could a silicon-based intelligence turn out to be, as several scientists have predicted, the next step in the evolutionary ladder? And if so, how do we know that a new race of vastly more intelligent machines would act in our interest?

But these are not questions A.I. researchers often ask. Their concerns are more pragmatic: What is intelligence, anyway? What are the mechanisms that produce it? How can those mechanisms be duplicated in an electronic computer?

In universities and research labs around the world, a handful of computer pioneers have been puzzling over the answers to these questions. At Yale they’ve been trying to program computers to learn. At Stanford and MIT they’ve been trying to get them to see. At Stanford, too, and at corporations like IBM and Xerox, they’ve been producing “expert systems”—intelligent assistants that qualify as experts in highly specialized fields such as medicine or electronics. And in Tokyo they’ve started on the most ambitious project of all—the ten-year, $500-million effort to produce a fifth-generation computer, a machine so advanced it will have intelligence built in.

At Berkeley, Wilensky and his small team of student researchers were focusing on two tiny pieces of the puzzle: the areas known as natural-language processing (that is, understanding human languages, such as English) and commonsense reasoning. They were building two natural-language programs, one to translate normal English sentences into an internal “meaning representation,” one to translate the meaning representation back into English—or Spanish or Chinese. They were also building two commonsense reasoning programs, PAMELA and PANDORA.

PAMELA was a program that understood stories: if you typed one in, it could figure out what had happened. It was based on the program Wilensky had done as a student, a program called PAM—Plan-Applier Mechanism, so called because its abilities depended on its knowledge of people’s goals and plans. PANDORA—the acronym stood for Plan ANalysis with Dynamic Organization, Revision, and Application—was a logical extension of this, a program that used the same knowledge of goals and plans to make plans of its own. It was being built by Joe Faletti, the most senior of the six Ph.D. candidates on Wilensky’s research team, and its area of expertise was the everyday world.

The first task PANDORA was assigned was to come up with a plan for picking up a newspaper in the rain. Faletti had programmed it to believe it was a person who’d just awakened and wanted to know what was going on in the world. It knew there was a newspaper on the front lawn, and it noticed that it was raining outside, and it knew it wanted to stay dry, so it needed to come up with a plan for getting the paper without getting wet. Faletti’s idea was that it should decide to put on a raincoat.

For a human, this is pretty trivial stuff. For a computer, it’s not. Fetching an object in the rain requires an incredible amount of knowledge—knowledge about rain and raincoats, outdoors and indoors, being dry and being wet. Humans learn these things gradually, from infancy on. Computers come into the world full-grown and ignorant. They don’t even know enough to come in out of the rain. But even so, I couldn’t deny an almost dizzying sense of absurdity when I first heard about PANDORA. I felt as if I’d entered some sort of bizarre computational netherworld where the Promethean and the quixotic had somehow merged.

“Welcome to A.I.,” Wilensky said with a smile.

JOE FALETTI HAD NOT STARTED OUT to teach a computer to put on its raincoat. He’d started out, some twelve years before, studying math and English as an undergraduate at Berkeley. He had come to this only by accident.

Faletti is a bearded young man who grew up in Antioch, an industrial town at the mouth of California’s bleak inland delta. As a grad student he went into computer science. For five years he explored various avenues of study, A.I. among them. During this period he joined an outfit known as the Frames Group, after a theory that MIT’s Marvin Minsky—a luminary of artificial intelligence—had come up with in 1974.

One of the chief problems—maybe the chief problem—in artificial intelligence is the question of knowledge representation: how to represent knowledge in a computer program so that it can be accessed on demand. This happens automatically in humans: we don’t have to think about how to store what we know about raincoats, say; we either store it or we don’t. With computers it’s different. So Minsky introduced the organizational method of storing knowledge in “frames”—data structures full of details that can be added or taken out at will.
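To make the idea concrete, here is a minimal sketch in Python of what a frame might look like. It is an illustration only, not Minsky's formulation and not code from the Berkeley programs; the `Frame` class and the slot names `worn_on` and `keeps_wearer_dry` are invented for this example.

```python
class Frame:
    """A named bundle of slots. Hypothetical, for illustration only."""

    def __init__(self, name, **slots):
        self.name = name
        self.slots = dict(slots)

    def fill(self, slot, value):
        # Add a detail, or overwrite one that is already there.
        self.slots[slot] = value

    def remove(self, slot):
        # Take a detail out again; a missing slot is simply ignored.
        self.slots.pop(slot, None)

    def get(self, slot, default=None):
        # Look a detail up on demand.
        return self.slots.get(slot, default)


# Assumed example data: what a program might store about raincoats.
raincoat = Frame("Raincoat", worn_on="body")
raincoat.fill("keeps_wearer_dry", True)
raincoat.remove("worn_on")
```

The point of the structure is only that details live in named slots that can be filled, looked up, or dropped without touching the rest of the program.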

The Frames Group at Berkeley was an informal discussion club whose dozen or so members got together weekly to discuss what was happening in the world of A.I. It was Faletti who insisted they start out every week by watching Mork & Mindy, the sitcom starring Robin Williams as an extraterrestrial, for tips. “It was great fun,” he told me. “Here you had this character who knew very little about anything. He had all these high-level reasoning powers but not the knowledge, and he had all these preconceptions that were just completely different from Earth people’s. He considered an egg to be already a living being, so at one point they had him throwing an egg up and saying ‘Fly, little bird, fly!’ So you could analyze the jokes he made and see what processes were failing and why.”

After a while, Mork learned too much about Earth and the A.I. aficionados of the Frames Group lost interest. About the time that happened, however, Wilensky came to Berkeley and started setting up an A.I. lab. Before long he and Faletti began talking about research projects the latter might undertake. Faletti said he’d like to do some natural-language work, but that he might like to have a little problem-solving stuff in there too.

Wilensky suggested a program that made its own plans, and for the next six weeks they met weekly to discuss hypothetical situations such a program might deal with. Say you want to watch a football game on TV but you have a paper due the next day. Faletti didn’t see much of a goal conflict there—he’s capable of writing a paper while watching television. But what if, instead of having to write a paper, you discovered your mother was in the hospital recovering from an emergency appendectomy? The solution, assuming your mother likes football, would be to realize that there are TV sets in most hospital rooms and that you could watch football and visit your mother at the same time.

Does Faletti’s mother like football?

“No,” he said. “I don’t even like football.”

After getting the go-ahead to begin his research, Faletti got distracted for a while teaching undergraduate courses and writing a new computer language for Wilensky that would make it easier to get PANDORA to work. With the beginning of the winter quarter, however, he returned to PANDORA full time. His immediate goal was to get it working in time to meet the April 15 deadline for papers for the conference that AAAI—the American Association for Artificial Intelligence—was holding that summer in Pittsburgh. His long-term goal was considerably more ambitious. “As a problem-solver,” he said, “PANDORA is supposed to be the controller of all conscious thought. But if PANDORA puts her raincoat on this week, I’ll be a lot happier.”

AT THE TIME, THE PURSUIT of artificial intelligence was barely a quarter century old. It had begun in 1956, when a Dartmouth mathematics professor named John McCarthy and a Harvard fellow named Marvin Minsky, along with an information specialist from IBM and an information specialist from AT&T, put together a ten-man summer conference to discuss the almost unheard-of idea that a machine could simulate intelligence. It was then that the term “artificial intelligence” was adopted, and it was then that the first genuinely intelligent computer program was demonstrated—the Logic Theorist of Allen Newell and Herbert Simon, two researchers associated with Carnegie Tech (now Carnegie-Mellon University). These four men—Minsky, McCarthy, Newell, and Simon—directed most of the significant A.I. research in the United States for the next twenty years, and the schools they settled at—MIT, Stanford, and Carnegie-Mellon—continue to dominate the field today.

At Berkeley, computer science was for years dominated by theorists, brilliant individuals who’d made a name for themselves in pursuit of the fundamental limits of computation. People in theory are interested in the deep philosophical questions of mathematics. For them, the computer is simply a tool. They tend not to care much about such frivolous concerns as whether it can think.

In the late seventies, however, some of the theory people at Berkeley decided that the field of A.I. was becoming too big to ignore. They formed a search committee to find someone who would make it happen. They were looking for a brand-new Ph.D. who could operate on his own—who could get funding and form a research group and build a whole operation from scratch. They were looking for a self-starter. Robert Wilensky came out at the top of their list.

As a grad student at Yale, Wilensky had studied under Roger Schank, the enfant terrible of artificial intelligence. Schank is controversial for a number of reasons, not the least of which is his insistence on the importance of language. Most of the early work in A.I. was done by mathematicians, and they concentrated on getting computers to perform sophisticated intellectual tasks—playing chess, solving complex puzzles, proving mathematical theorems. Unfortunately, their work only proved that it’s possible to be extremely smart in these areas and still be quite stupid about the world. What’s really important about intelligence, people gradually came to realize, is not our highly developed powers of logic but our ability to communicate and use common sense—which is why a recent article in Scientific American concluded that these are two of the greatest challenges facing A.I. researchers today.

ON A CHILLY DAY IN MARCH, in the cubbyhole that was the A.I. office, Joe Faletti was seated at a computer terminal running PANDORA on Kim. He tapped a key on the keyboard and the screen filled up with lists of words surrounded by parentheses. That was LISP—LISt Processing, the computer language developed by John McCarthy in 1958 and still used by most A.I. programmers today. The lists told PANDORA that it was Time Morning and that PANDORA was a person who wanted to know what was going on in the world. They also told it that the weather was Condition Raining. This caused a “demon” known as WatchAts to appear on the screen.

“A demon,” Faletti explained, “is a process that you want to be waiting in the background. It watches everything that happens, and when you get the conditions that say it’s appropriate for it to run, it runs. If it’s raining and you hear someone’s going outside, you need to know they’re going to get wet—and you need to know that automatically. You don’t want to have to think, ‘Okay, I’m going outside—does that mean I’m going to get wet?’”
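Faletti's description can be sketched in a few lines of Python. This is a loose modern illustration, not PANDORA's Lisp; the `Demon` class, the `assert_fact` function, the demon name `GetWetWatcher`, and the fact strings are all assumptions made up for the example.

```python
class Demon:
    """A watcher that runs its action when its condition holds. Hypothetical."""

    def __init__(self, name, condition, action):
        self.name = name
        self.condition = condition  # function: set of facts -> bool
        self.action = action        # function: set of facts -> None


def assert_fact(facts, demons, fact):
    """Record a new fact, then let every demon whose condition holds run."""
    facts.add(fact)
    fired = []
    for demon in demons:
        if demon.condition(facts):
            demon.action(facts)
            fired.append(demon.name)
    return fired


# Assumed example: it is raining, and someone is about to go outside.
facts = {"weather: raining"}
demons = [
    Demon(
        "GetWetWatcher",
        lambda f: "weather: raining" in f and "location: outside" in f,
        lambda f: f.add("condition: wet"),
    )
]
fired = assert_fact(facts, demons, "location: outside")
```

Nothing in the calling code asks whether going outside means getting wet; the inference happens as a side effect of recording the new fact, which is the behavior Faletti describes.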

As he spoke, still more lists scrolled across the screen.

“This says that the normal plan for knowing what’s going on in the world is to go outside, pick up the newspaper, and go back inside,” he continued. “Now what I want to do is simulate that plan to figure out whether it’s going to work or not. The way I simulate it is to assert its effects to see if they’re okay. That’s one way of noticing that you’re going to get wet, because an effect of going outside is that you’ll be outside, and being outside when it’s raining is where the inference comes in.”

How do you organize knowledge about raincoats and umbrellas and dogs and running in a way that all of it can be accessed when you wake up in the morning?

By that time, PANDORA was already asserting the effects of its actions. Faletti began to look excited. “See, when it asserts that the effects of going outside are that it’s going to be outside, this demon RainCheck says, ‘Hey, wait a minute! You’re going to be outside, and that means I should watch for dryness!’ And then WatchDryness says, ‘Oops! You’re going to be soaking!’ That creates a goal conflict between the PTrans—going outside—and wanting to be dry. So we invoke Resolve-Goal-Conflict.

“Now we have this goal of resolving the goal conflict. Normal plan for that is to put on raincoat. So we assert the effects of putting on a raincoat, which I haven’t put in, and we assert the effects of PTransing outside, and—”

Suddenly he burst out laughing. “This doesn’t work because it doesn’t know that having the raincoat on means it won’t get wet. I haven’t gotten that far yet. See up here? It’s asserting the effects of the plan, and it says the effects of PutOn is nil and the effects of this PTrans is it’s going outside, and then it runs the demon that says, ‘Oh, it’s raining, you’re going to get wet!’ So it keeps saying ‘Oh! Got to put a raincoat on!’—over and over again.”

IN COMPUTER TERMS, WHAT HAD HAPPENED TO PANDORA was that it had fallen into an infinite loop. If allowed to continue, it would have kept putting on its raincoat endlessly, never realizing that it had resolved its conflicting goals, or that it had any choice but to keep repeating the action it had been programmed to take. In fact, it didn’t have any choice. All PANDORA knew about the world was what it had been programmed to know, and in the primitive state of its programming, a crucial piece of information had been left out: raincoats keep you dry. This left it locked in a pattern of endless repetition. It put on a raincoat because that was the normal plan for going out in the rain, but it didn’t know the raincoat would keep it dry; so it put on a raincoat because it was the normal plan for going out in the rain, but it didn’t know the raincoat would keep it dry; so it . . . and so on. It’s a problem no human will ever face.
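The trap is easy to reproduce in miniature. The Python sketch below is an assumption-laden toy, not Faletti's program: the function `resolve_conflict`, the fact names, and especially the `max_rounds` cap are invented here so that the demonstration terminates. PANDORA, as described above, had no such cap.

```python
def resolve_conflict(put_on_effects, max_rounds=5):
    """Count raincoat attempts before the wet/dry conflict goes away.

    put_on_effects: facts asserted by putting on the raincoat
                    (an empty set reproduces the missing "Effects" slot).
    max_rounds:     safety cap added for this sketch only.
    """
    facts = {"raining"}
    puts = 0
    for _ in range(max_rounds):
        # Simulate going outside by asserting its effect.
        simulated = facts | {"outside"}
        # Stand-in for the demon: outside in the rain, unprotected, means wet.
        will_get_wet = (
            {"raining", "outside"} <= simulated and "protected" not in simulated
        )
        if not will_get_wet:
            return puts  # conflict resolved; planning can move on
        # Normal plan for the conflict: put on the raincoat.
        puts += 1
        facts |= put_on_effects
    return None  # gave up; without the cap this would never return


stuck = resolve_conflict(set())          # effects of PutOn left empty
fixed = resolve_conflict({"protected"})  # effects slot filled in
```

With the effects left empty, every round looks exactly like the last one, so the only exit is the artificial cap. Filling in the single missing fact lets the loop end after one raincoat.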

Loops like these occur in computer programs because loops are what most programs are made up of—not infinite loops, from which there’s no escape, but more useful kinds of loops that keep the program pointed in the right direction. PANDORA itself is nothing more than a long sequence of looped instructions. If any events occur, it’s instructed to deal with them; if any goals pop up, it’s instructed to plan for them; and if any plans are made, it’s instructed to execute them. It keeps on doing this until all events have been processed, all goals planned for, and all plans executed. Then, when there’s nothing left to do, it stops—unless it slips into an infinite loop that prevents it from completing the series.
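A rough Python rendering of that cycle might look like the following. It is a sketch under stated assumptions rather than a reconstruction of PANDORA: the function `run`, its two callback parameters, and the sample event and goal strings are hypothetical, and the real program's handling of events, goals, and plans was surely more involved.

```python
from collections import deque


def run(events, goal_for, plan_for):
    """Process events, then goals, then plans, until nothing is left."""
    events = deque(events)
    goals, plans, executed = deque(), deque(), []
    while events or goals or plans:
        if events:
            # Deal with an event; it may give rise to a goal.
            goal = goal_for(events.popleft())
            if goal is not None:
                goals.append(goal)
        elif goals:
            # Plan for a goal; a plan is a list of steps.
            plans.extend(plan_for(goals.popleft()))
        else:
            # Execute the next planned step.
            executed.append(plans.popleft())
    return executed


# Assumed example data, loosely following the newspaper scenario.
goal_for = {"woke up": "know the news"}.get
plan_for = lambda goal: ["go outside", "grasp newspaper", "go inside"]
steps = run(["woke up"], goal_for, plan_for)
```

The loop stops on its own because each pass removes one item from a queue. If planning for a goal kept producing the same goal again, the queues would never empty, which is the infinite loop in a nutshell.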

In this case, the unwelcome loop could be fixed by filling in the “Effects” slot in the raincoat plan. But while that would be fine for raincoats, what would happen when PANDORA learned about other ways of staying dry—umbrellas, say? Things could get complicated rather quickly, and Faletti didn’t want PANDORA to stay at the raincoat stage forever.

“My first goal is to get PANDORA to put on a raincoat,” he explained. “After that, if it doesn’t have a raincoat, I want it to think of going out and buying a raincoat—and then it has to realize that if it does that, it’ll get wet. Hopefully it’ll eventually get to the point where it decides to send the dog out, or else run. But to do that it has to realize that getting wet is okay if you don’t get too wet.” He grinned. “Maybe it’ll use the newspaper on the way back to cover its head.”

TO COME UP WITH SOLUTIONS LIKE THESE, PANDORA would need a far more sophisticated knowledge base than it had now. The problem was one of representation. At the moment, PANDORA’s knowledge base consisted of nothing more than a few slot-fillers and some inference rules—that is, a few facts arranged fill-in-the-blank style and some rules that said, “If this, then do that.” But if it were to deal with anything more complicated than a raincoat, PANDORA would need a much more efficient memory. How would you set one up? Joe wasn’t sure, but he was working on the problem with Peter Norvig, the student who was building PAMELA.

The solution they came up with was to store the knowledge in frames. They spent several weeks setting up the frames and designing a mechanism that would invoke the right frame in the right order. Then they installed this mechanism in the two sister programs, PAMELA and PANDORA—the story-understander and the common-sense planner. And so, on the second day of spring quarter, Faletti was once again struggling to get PANDORA to put on its raincoat. He didn’t have much time. The AAAI deadline was almost a week away and he still had to write his paper. He headed straight for his desk. Within seconds he’d logged into Kim and loaded the PANDORA program. He was a bit tense. This would be PANDORA’s trial run.

He typed in the NextEvent command and started PANDORA on its loop. The screen before him filled up with green lettering. He nodded approvingly as the lettering scrolled before him. Everything was going according to plan. The TimeOfDay was Time Morning and the Weather was Condition Raining. Without hesitation, PANDORA found its normal plan. It had to PTrans to Location Outside. It had to Grasp the Object Newspaper. It had to PTrans to Location Inside.

Next it went through the motions in simulation mode. As soon as it got to the first step, a new goal went into its goal stack. It was Resolve-Goal-Conflict: having automatically detected the conflict between its original goal of PTransing to Location Outside and its built-in Preserve Dryness goal, it now had the new goal of resolving the conflict between them. It waited for the next command.

Up until this point, all PANDORA had done was to find the appropriate plans and run through them in its “mind.” Then it put them in its FuturePlans stack. Now it was ready to go through that stack and carry them out—to put on its raincoat and pick up the paper. Of course, it wasn’t really going to do anything of the sort. It was only a computer program—a sequence of commands in Franz Lisp, Opus 38, plus PEARL; a string of zeros and ones that had been arbitrarily divided into thirty-two-bit “words”; a series of positive and negative impulses that had been read off a whirring magnetic disk and were now zipping through the circuitry of Kim No-VAX in the chilly isolation of the fourth-floor machine room. But PANDORA didn’t know that. It didn’t know anything except what Faletti had programmed it to know, and the only thing Faletti had programmed it to know was what to do in the morning when it’s raining.

Faletti typed in “Go.” On cue, PANDORA found the normal plan for resolving its goal conflict. The plan was to PutOn its Object Raincoat. It simulated this to anticipate its effects and then put it at the top of the plan stack. Now it was ready to execute its plans.

Faletti typed in the “Go” command again. More writing scrolled across the screen. As it did so, a stricken look passed across his face. For some reason, PANDORA had PutOn its raincoat and then decided to PTrans inside. As Faletti looked on, it continued to execute the remainder of its plan, grasping the newspaper and then PTransing outside. He stared at the screen with the horrified expression of a man who has just watched his child spout gibberish and vomit green foam. After all this, PANDORA had gotten it backward. It had gone inside to pick up the newspaper, and then it had gone out in the rain to read it. Faletti let out a low moan and turned toward the window.

July 1, 1984
