Adapted from Into the Heart of the Mind: An American Quest for Artificial Intelligence (Harper & Row, 1984). For a very different perspective on AI in the eighties, see “The PANDORA Project,” excerpted from Into the Heart of the Mind and published in Esquire.

About the publication: Science 85 was the 1985 iteration of the magazine published by the American Association for the Advancement of Science as a popular counterpart to Science, the organization’s prestigious journal. The first issue was published in December 1979 as Science 80; the final issues were known as Science 86. At that point the magazine was purchased by Time Inc., which folded it into Discover.

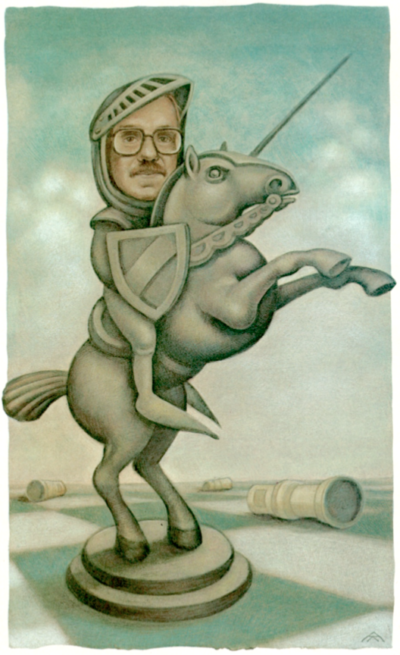

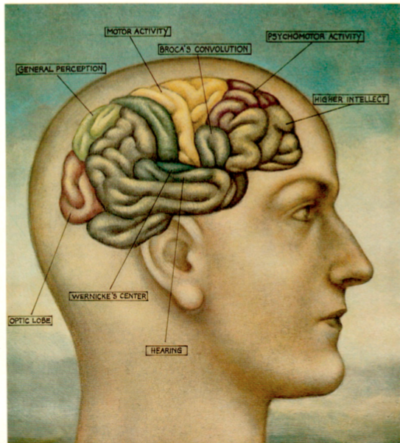

Illustrations by Anita Kunz

HUBERT DREYFUS LEANED BACK in his desk chair, planted his feet on an open drawer, and dug in his heels. “The way people are misled about artificial intelligence,” he declared, “is by scientists who say, Pretty soon computers will be smarter than we are, and then we’ll have to worry about how to control them.”

For two decades now, the feisty, impassioned Dreyfus, an existentialist philosopher at the University of California at Berkeley, has been in the forefront of the controversy over artificial intelligence. A small, wiry individual with tortoise-shell glasses and a brilliant shock of carrot-colored hair, Dreyfus approaches his role with the intensity of a crusader. He maintains that computers will never be able to think because scientists will never come up with a suitably rigorous set of rules to describe how we think. To many computer scientists, this is like saying the Earth is flat. But so far, none of them have been able to prove him wrong.

Even most AI researchers now admit that before they can make computers any smarter, they’ll have to come up with an explanation of how intelligence works in people. This realization has coincided with the emergence of cognitive science, a new discipline linking philosophy, psychology, anthropology, linguistics, neuroscience, and computer science in an attempt to develop a theory of the way humans think. The guiding principle of most cognitive science research is the notion that the mind, like the computer, is a system for manipulating symbols—for processing information. The task of cognitive science is to discover how this processing occurs.

At issue are the merits of two descriptions of reality—one experiential and intuitive, the other theoretical and mathematical. During the scientific revolution of the 17th century, philosophy gave rise to physics as a way of understanding the natural world through formal laws; now it is witnessing the birth of a new science that seeks to understand the mind through formal rules. Will the workings of the mind prove amenable to formal description? That turns out to be the real question. Hubert Dreyfus says they will not.

IN ATTACKING FORMALISM, Dreyfus is attacking not just the basic notion of cognitive science but one of the most powerful tools of modern thought. Formal reasoning involves reducing the real world to strings of symbols that can be manipulated according to explicitly stated rules. This kind of abstract thought is the basis of mathematics, logic, physics, and engineering. Space exploration would be inconceivable without it; so would computers and airplanes and nuclear missiles. The success of such enterprises has led scientists, mathematicians, and philosophers alike to wonder if there is any limit to the range of things that can be formalized. “Can all of reality be turned into a formal system?” computer scientist Douglas Hofstadter asked in Gödel, Escher, Bach. In a very broad sense, the answer might be yes.

Hofstadter was not the first to reach this conclusion. It was Plato who first raised the issue more than 2,300 years ago when he declared that all knowledge must be expressible as theory, as a set of explicit forms or definitions. The mathematician and philosopher Gottfried von Leibniz picked up the question in the 17th century when he set out to construct a “universal calculus” by which all of human reason could be reduced to a single mathematical notation. At the beginning of this century, the philosophers Bertrand Russell and Alfred North Whitehead succeeded in showing that logic could be expressed as a formal symbol system, although their claim that all of mathematics could be derived from it was disproved by mathematician Kurt Gödel’s Incompleteness Theorem. More recently, MIT linguist Noam Chomsky has argued that grammar, or the structure of language, also can be described according to formal principles.

If the mind really is a formal symbol-manipulating system, then what cognitive scientists have to do is figure out how it works. In arguing that this is misguided, Dreyfus is challenging the limits of scientific inquiry. Maybe if he were a mathematician, he could do to the field of cognitive science what Kurt Gödel did to the symbolic logic of Russell and Whitehead—demonstrate conclusively that its aims are unreachable. But that would require a formal proof, and according to Dreyfus no such proof can be constructed.

Instead, Dreyfus makes the case for intuition. He argues that perception, understanding, and learning are not just a matter of following discrete rules to compute a result; they’re holistic processes that make possible our status as human beings living and breathing and interacting in the world. This view is supported by recent psychological studies in categorization. Where do we draw the line between a cup and a vase? It depends on the context. How do we know that an A in Old English typeface is the same as an A in Times Roman typeface? Certainly not by counting serifs. If these really are intuitive processes, then Dreyfus is right: The quest for logical rules is a senseless one.

“Certainly there are some things that are formalizable,” says Robert Wilensky, the head of the AI lab at Berkeley, “and some things that resist it more and more. But where do you draw the line? And can you continue pushing the line further and further? Those are the interesting questions, and my real objection to Dreyfus is, why say at this stage that it’s going to fail?”

FROM DREYFUS’S POINT OF VIEW, a more reasonable question would be, Why assume that it’s going to work? Called upon to address that question at a 1982 cognitive science colloquium at Berkeley, Dreyfus—scrappy looking in corduroy pants and a Western shirt—faced an audience of scruffy grad students and faculty from behind a large plywood table. “Now the logic of this whole thing,” Dreyfus said, “is that there are a whole lot of phenomena in our experience—skills, moods, images, similarities, meanings—that don’t look like they’re amenable to formal analysis. And then there’s a lot of heavy going trying to show that they can be so analyzed anyway. And then a lot of research and a lot of counterexamples, and more research and more counterexamples, and so the question arises, why are people so busy trying? Why is formalism such an attractive option?

“I think that’s a fascinating question. I think you’ve got to go back to the beginning of the whole philosophical tradition to get any kind of answer to that, because the attraction of having nothing hidden, of having everything explicit, goes clear back to Plato. Plato saw the intellectual beauty of it; then, in 1600, Galileo showed the incredible power of it; and now, naturally, everybody would like to have a completely explicit, formalizable theory.”

During the question-and-answer exchange that followed, one student (an unabashed formalist) tried to compare Dreyfus to the cardinals of the Inquisition, those enemies of science who told Galileo the Earth couldn’t move around the sun because that was contrary to Scripture. People began to titter, but Dreyfus was not even momentarily taken aback. In fact, the comparison (Dreyfus with the cardinals, the cognitive scientists with Galileo) was not as fanciful as it might sound.

It was Galileo—astronomer, physicist, mathematician—who laid the foundations for the scientific investigation of the physical world. He did this by combining experimentation with mathematical formalism and showing that mathematics, which had been thought a purely abstract discipline, could be applied to earthly reality. Mathematics provided a seductive tool—a language powerful enough to describe with precision what the experiments suggested. In order to use this tool, however, certain sacrifices had to be made: Subjective properties such as color, taste, and smell could not be described mathematically and were deemed to have little usefulness in describing the physical world.

The power of mathematics was sufficient to enable Newton to penetrate the mysteries of celestial motion and thus come up with a model of the universe. With his fundamental Laws of Mechanics, Newton provided the first modern scientific explanation of a universe that had always seemed mystical and veiled. He created a vision of a world existing in absolute space and absolute time, operating as a clocklike mechanism according to natural and immutable laws. God had set it all in motion, and now it was operating according to His plan. This is not, we know now, the world we live in—but it resembles the real world enough to have given Western humanity a sense, for more than two centuries at least, that reality was predictable and comprehensible.

This is what formal theory does: It postulates order in a seemingly random universe. It reassures us that our world is understandable and hence, in some deep sense, okay. But the mind is characterized by the very subjective properties that have been eliminated from formal descriptions in physics. And more important, in Dreyfus’s view, formalism leaves out situational properties like opportunities, risks, and threats that people experience in their everyday world. In attacking formal theories of the mind, Dreyfus is arguing that formal theory has reached its limits—that there are things about the way the mind works that theory will never be able to explain.

There was one way the comparison of Dreyfus with the cardinals didn’t hold up, however. In Rome, Galileo had been alone against a multitude of cardinals. At Berkeley, it was Dreyfus who was alone, facing a roomful of cognitive scientists. Times had changed.

DREYFUS’S QUARREL with the science of cognition began long before the new discipline was born. It began in the early ’60s, when he was a junior philosophy instructor at MIT. Some of his students were also students of Marvin Minsky, the founder of the artificial intelligence lab there, and they told Dreyfus that the philosophy of the mind he was teaching was out of date—that the problems of understanding and perception and so forth were about to be solved by AI people. Dreyfus felt that couldn’t be true.

As a follower of the 20th-century existential German philosopher Martin Heidegger, he believed that the mind operates not on the basis of internal rules but against a background of practices that define what it means to be a human on Earth. These include the skills and common-sense solutions people develop to cope with the everyday world. This shared social background is what Dreyfus thinks will forever resist formalization. Heidegger broke with the philosophical tradition that began with Plato—and as a Heideggerian, Dreyfus took the claims of the AI people as a challenge. If they were right, then his school of philosophy was wrong.

In 1972 Dreyfus published What Computers Can’t Do: The Limits of Artificial Intelligence, a scathing critique of the inflated claims and unrealistic expectations of the AI community. In it he took to task, for example, Herbert Simon of Carnegie-Mellon University, who had predicted in the late ’50s that a computer would be the world chess champion within a decade. This still hasn’t happened, although Richard Greenblatt, a legendary hacker at MIT, did succeed in building a chess-playing program called MacHack that beat Dreyfus in 1967 and then went on to become an honorary member of the U.S. Chess Federation. Simon also predicted that computers would soon be able to handle any problem humans could. This hasn’t happened either, and AI researchers are at a loss to predict when it will, although many now put it hundreds of years away.

The “artificial intelligentsia,” as Dreyfus called them, reacted to his assault with howls of outrage. Seymour Papert of MIT, one of the inventors of the children’s computer language LOGO, penned a reply he called “The Artificial Intelligence of Hubert L. Dreyfus.” Simon dismissed Dreyfus’s arguments as “garbage” in Machines Who Think, a history of the AI field by science writer Pamela McCorduck. But as time went on, a curious thing happened: Many AI people began to modify their position. They still complained bitterly about his hectoring tone, and he still ridiculed them at every opportunity. But no one could deny that to a certain extent he’d been right: Intelligent machines were not going to come rolling out of the factory tomorrow.

The turning point probably came with the failure of the microworlds approach, which was briefly popular in the AI community during the early 1970s. This tactic was typified by SHRDLU, a computer program that was built at MIT to demonstrate language comprehension by keeping track of toy blocks. Within the context of its blocks world, SHRDLU was remarkably intelligent: It could reason, communicate, understand, even learn (a new shape, for example). Outside the blocks world it was hopeless. Limited-domain programs such as this one were built with the idea that once you got them to function in some circumscribed environment, you could extend them to deal with the whole world. This turned out not to be the case.

The man who pointed this out most vigorously was computer scientist and psychologist Roger Schank of Yale University, a pioneering figure in cognitive science. Most AI people started out as mathematicians, and their aim was generally to build logical models of the brain. Schank was interested in building cognitive models: programs based on psychology rather than the principles of formal logic. Partly at his instigation, partly out of the realization that the microworlds approach wasn’t going to work, the focus of AI has shifted toward efforts at knowledge representation, that is, figuring out how to organize data about the whole world, not just the blocks world, in a thoroughly explicit manner.

This is what philosophers have been trying to do for centuries, but Schank and other AI researchers feel that they have a technological edge. “All the issues that we’re interested in, intention and belief and language, those are all philosophical questions,” Schank admits. “The difference is that we have a tool, right? We have this computer. And what it does for us is it tests our theories.

“If you look at a philosopher’s theories, you’ll discover that they aren’t algorithmic at all. They step back and say, ‘Well, there are these general things going on.’ Computer scientists tend to get very antsy when they hear that, because they want to know what’s first and what’s second. But the computer shouldn’t be taken too seriously. What we’re interested in is building algorithms. What are the human algorithms? One way or another, humans have algorithms—and one way or another, I’d like to find out what they are.”

And Dreyfus’s argument that it’s an impossible task? “Oh, that’s just because he’s a mystic. I mean, look. What does he mean, ‘It’s not possible?’ There’s always somebody standing around saying ‘It’s not possible,’ so you do it.”

March 1, 1985