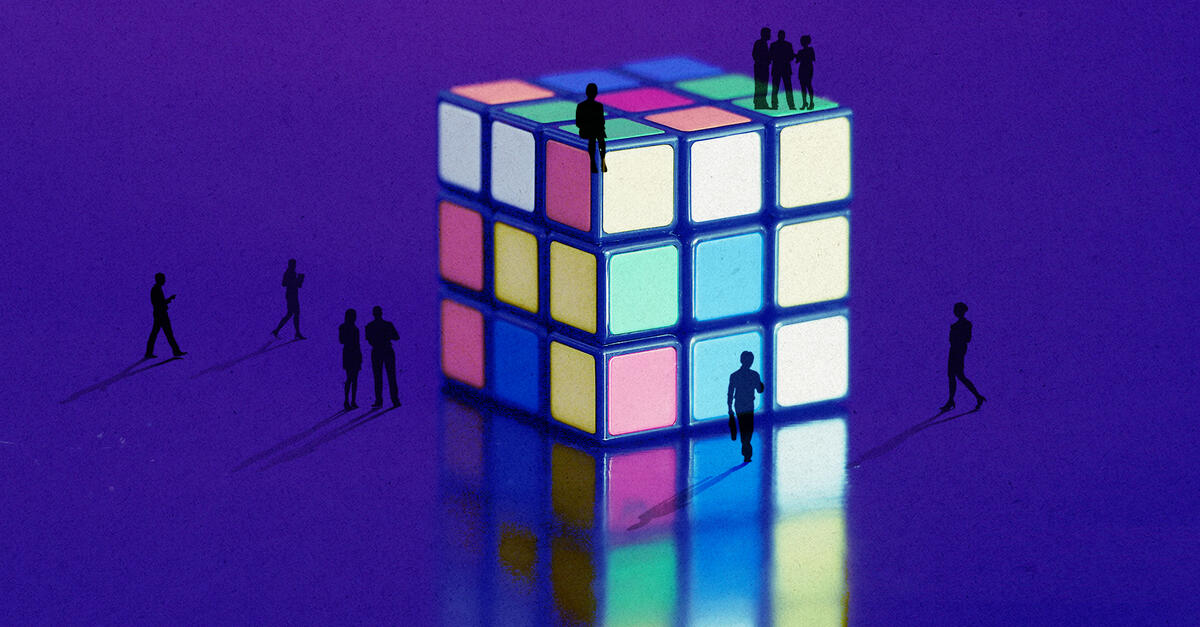

IF YOU’RE CONCERNED that you spend too much time worrying about the risk we face from bioengineered pathogens, maybe you should consider the likelihood that something else will get us first. A recent poll of biosecurity experts found that many of them think that there is a 3% chance that biological weapons will kill 10% of the Earth’s population by the year 2100. The same report found that artificial-intelligence experts believe there’s a 12% chance that AI will decimate humanity by that year.

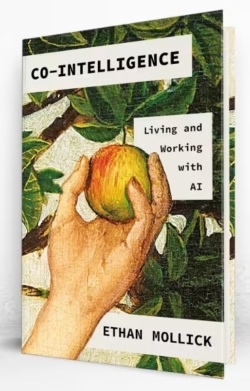

CO-INTELLIGENCE: Living and Working with AI, by Ethan Mollick. Portfolio, 256 pages

But 2100 is more than three-quarters of a century away. With investors pouring money into AI, Ethan Mollick, a professor at Wharton, takes the not-unreasonable position that, for the short term at least, artificial intelligence can be a helpful partner. Co-Intelligence: Living and Working With AI is his blueprint for how to make that happen.

Mr. Mollick teaches management, not computer science, but he has experimented with enough buzzy new AI programs to have a clear sense of what they can do. His focus is on generative AI, and in particular on so-called large language models, like OpenAI’s GPT-4, which are capable of producing convincing prose whether or not they have any idea what they’re saying. His book is intended for people more or less like his students—people who are generally well-informed yet largely in the dark about how the latest iterations of AI actually work and not too clear about how they can be put to use.

Books: Digital Life

Against the Stream | Mood Machine, by Liz Pelly | The Wall Street Journal | Jan. 26, 2025

Learning to Live With AI | Co-Intelligence, by Ethan Mollick | The Wall Street Journal | April 3, 2024

Swept Away by the Stream | Binge Times, by Dade Hayes and Dawn Chmielewski | The Wall Street Journal | April 22, 2022

After the Disruption | System Error, by Rob Reich, Mehran Sahami and Jeremy Weinstein | The Wall Street Journal | Sept. 23, 2021

The New Big Brother | The Age of Surveillance Capitalism, by Shoshana Zuboff | The Wall Street Journal | Jan. 14, 2019

The Promise of Virtual Reality | Dawn of the New Everything, by Jaron Lanier; Experience on Demand, by Jeremy Bailenson | The Wall Street Journal | Feb. 6, 2018

When Machines Run Amok | Life 3.0, by Max Tegmark | The Wall Street Journal | Aug. 29, 2017

The World’s Hottest Gadget | The One Device, by Brian Merchant | The Wall Street Journal | June 30, 2017

Soft Skills and Hard Problems | The Fuzzy and the Techie, by Scott Hartley; Sensemaking, by Christian Madsbjerg | The Wall Street Journal | May 27, 2017

Confronting the End of Privacy | Data for the People, by Andreas Weigend; The Aisles Have Eyes, by Joseph Turow | The Wall Street Journal | Feb. 1, 2017

We’re All Cord Cutters Now | Streaming, Sharing, Stealing, by Michael D. Smith and Rahul Telang | The Wall Street Journal | Sept. 7, 2016

Augmented Urban Reality | The City of Tomorrow, by Carlo Ratti and Matthew Claudel | The New Yorker | July 29, 2016

Word Travels Fast | Writing on the Wall, by Tom Standage | The New York Times Book Review | Nov. 3, 2013

April 3, 2024